To both of my loyal readers, I apologize for not writing anything in a while, but I have been absolutely slammed with classes and conference presentations. Anyway, I’ve been doing a lot of thinking about my earlier post about Teaching Data Science in English. The post provoked a decent response, mostly positive.

One reader sent me the following comment about my post which I’ve decided to quote (with permission) in its entirety because I think it accurately reflects why people get so frustrated when they try to learn mathematical concepts. What interested me was that this individual took action and “translated from mathspeak to English” and all of a sudden she was able to understand the underlying concepts.

Awhile ago I read a piece you had written on LinkedIn about making ‘mathspeak’ and ‘techspeak’ (i.e. coding) more accessible to regular people, by decreasing mathematical notation usage and increasing the use of real words in explanations of formulas and concepts. It was something that stayed with me because I’ve always understood broader mathematical concepts but have always had trouble with the mechanics, and I think a lot of that has had to do with the amount of notation used…math seems like a foreign language sometimes, and there are 2 levels of understanding: the first is merely deciphering the ‘foreign language’, which already puts me out of my comfort zone (think reading Spanish or French if you are a native English speaker) and then understand the underlying concepts, which becomes harder due to the fact that it’s written in a ‘non-native’ language. Recently I’ve started taking an online course in machine learning on XXX. Already in the second lesson, he dove straight into notation-filled formulas, and I was starting to get that overwhelmed feeling that I’m familiar with from previous years of math. But I had what you wrote in my mind, and I thought I’d give it a shot and manually ‘translate’ the formulas and equations into English, and stick with that. Well, I did that, and it worked so well. I feel that I am able to follow along with the underlying theory of the class and by extension, the formulas and algorithms he presented in ‘mathese’ whereas before I would have shut-down and assumed it was beyond my grasp. Thanks so much for highlighting this aspect of the math/English understanding divide. It is continuously helpful for me. (Emphasis mine)

I’d like to share another related story. One of my first paying jobs was as the web developer for KUAT public television (www.kuat.org), where I wanted to do some things that required automating a data flow from an archaic DOS-based database. I was teamed up with a programmer who helped me build the process, and in doing so, I learned how to write regular expressions. I got so into it, I nearly automated myself out of a job.

Fast forward a year or so: when I was nearly done with my CS degree, I had to take an upper-level CS course about Automata, Grammars and Languages, which included regular expressions in the course description. I was pretty excited because by this point I had become a master at regular expressions, and I was looking forward to a class where I already knew some of the material going in. Boy, was I in for a shock. When we got to the regular expressions section, it degenerated into a plethora of Greek letters and assorted jargon, to the point where I truly loathed going to class.

Theory Should Not Be Taught at the Expense of Application

What I also realized in that CS class was that although most of my fellow students may have passed the tests, they did not have any clue how to use regular expressions in real life, or why you would want to use them in the first place. While we were spending time writing expressions that match ‘aaaaaaabababaaaaa’ and drawing the automata that “implement” them, the knowledge of how to apply this to a real-life problem, such as extracting data artifacts from raw data, was completely lost on the class.

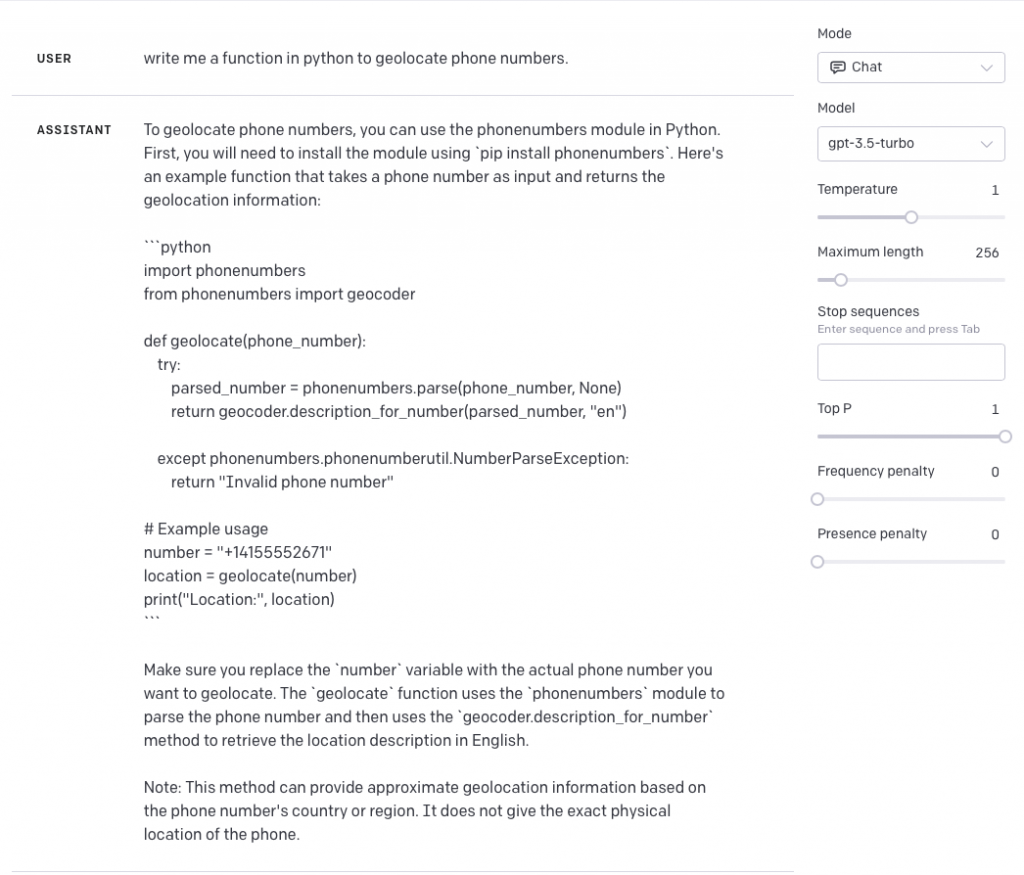

What if the instructor had started the class by showing us this:

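    import re

    # text is assumed to hold the raw string you want to scan
    pattern = r'([a-zA-Z0-9_.]+)@([a-zA-Z0-9_.]+\.\w{2,3})'
    # re.search takes the pattern first and looks anywhere in the text
    matchObj = re.search(pattern, text)
    if matchObj:
        email = matchObj.group(0)    # the whole matched address
        account = matchObj.group(1)  # the part before the @
        domain = matchObj.group(2)   # the part after the @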

If you’re not familiar, this brief example in Python-esque pseudo-code demonstrates how to match an email address in text and extract the full address, the account and the domain.
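To make the idea a bit more concrete, here is a minimal, self-contained sketch that applies the same pattern to every address in a block of text. The function name `extract_emails` and the sample sentence are my own assumptions for illustration; only the pattern itself comes from the snippet above.

```python
import re

# Same pattern as the snippet above, compiled once so it can be reused.
EMAIL_PATTERN = re.compile(r'([a-zA-Z0-9_.]+)@([a-zA-Z0-9_.]+\.\w{2,3})')


def extract_emails(text):
    """Return a list of (email, account, domain) tuples found in text.

    Hypothetical helper for illustration; not part of any library.
    """
    results = []
    # finditer walks through every non-overlapping match in the text
    for match in EMAIL_PATTERN.finditer(text):
        results.append((match.group(0), match.group(1), match.group(2)))
    return results


# Made-up sample data
sample = "Contact alice@example.com or bob.smith@mail.example.org for details."
for email, account, domain in extract_emails(sample):
    print(email, account, domain)
```

The pattern is deliberately simple and will not handle every valid address (for instance, top-level domains longer than three characters get cut off), but it is enough to show why you would reach for a regular expression in the first place.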

I don’t think I’m saying anything new here, but too many technical classes, both in academia and out, spend a disproportionate amount of time on the underlying theory whilst ignoring or downplaying the actual application of the concepts being taught. The result is that many students walk away frustrated, not understanding the actual use of what they are learning. While professors and instructors may pat themselves on the back for preserving the “purity” of their curricula, I would argue that they have utterly failed in their task of educating their students.

The bottom line is that some people are really interested in theory. However, for knowledge to be translated into something useful, students should be exposed early and often to a theory’s application. In conclusion, if you are designing STEM training or classes at a university, don’t forget the importance of demonstrating how to apply the concepts you are teaching.

I spend most of my time now teaching others about data science, so I do a lot of research into what is going on in data science education. To that end, I decided to take an online machine learning course, and it led me to a serious question: why don’t we use pseudo-code to teach math concepts?
