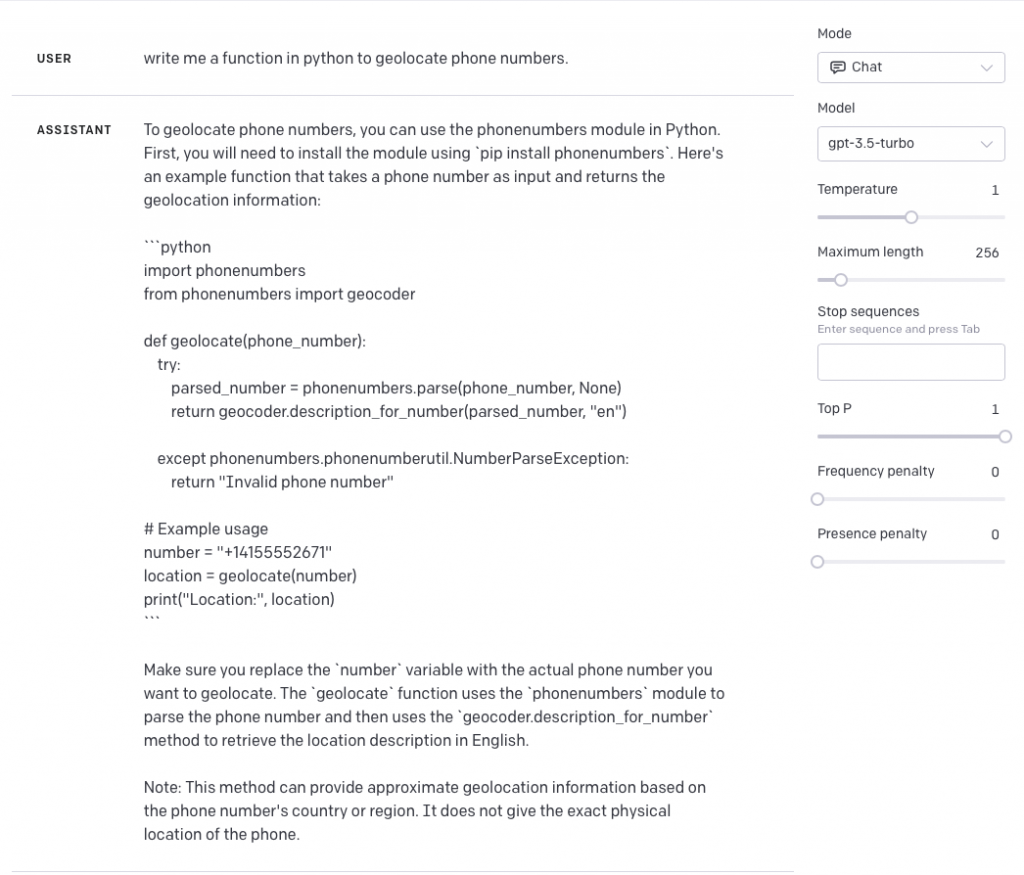

A lot of people have tried out ChatGPT and other LLMs for their code-writing abilities. My theory was that LLMs would be really good at writing code to do things they’ve seen before, but not so good at things that were completely new. I started my experiments by asking ChatGPT to write me a function in Python to geolocate a phone number. ChatGPT 3.5 did a relatively poor job of this, so I tried again using the playground and gpt-3.5-turbo. This time it was more successful.

That’s not bad. I like that it used the phonenumbers library rather than calling some external service.

Something Harder: A Drill UDF

Apache Drill, my favorite query engine, has an interface that lets users write User Defined Functions (UDFs), which are essentially custom functions. The interface is tricky to use, but once you’ve built them, UDFs can be extremely powerful for data cleanup and other tasks. I thought I’d see whether I could get GPT to generate an Apache Drill UDF to do the same thing.

Note: I’d actually done this already as a part of DataDistillr. That code is proprietary, but I wanted to see how GPT would do compared with the code that I wrote. Here’s what gpt-3.5-turbo generated:

    import org.apache.drill.exec.expr.fn.FunctionHolder;
    import org.apache.drill.exec.expr.holders.VarCharHolder;
    import org.apache.drill.exec.expr.annotations.FunctionTemplate;
    import org.apache.drill.exec.expr.annotations.Output;
    import org.apache.drill.exec.expr.annotations.Param;
    import com.maxmind.geoip2.DatabaseReader;
    import com.maxmind.geoip2.model.CityResponse;
    import java.io.File;
    import java.io.IOException;
    import java.net.InetAddress;

    @FunctionTemplate(
        name = "geolocate_phone",
        scope = FunctionTemplate.FunctionScope.SIMPLE,
        nulls = FunctionTemplate.NullHandling.NULL_IF_NULL
    )
    public class GeoLocatePhoneNumbersUDF implements DrillSimpleFunc {

        @Param
        VarCharHolder phoneNumber;

        @Output
        VarCharHolder out;

        private static final String GEOIP_DATABASE_PATH = "/path/to/GeoLite2-City.mmdb"; // Replace with the actual path to the GeoLite2 database

        @Override
        public void setup() {
        }

        @Override
        public void eval() {
            String phoneNumberStr = org.apache.drill.exec.expr.fn.impl.StringFunctionHelpers.toStringFromUTF8(phoneNumber.start, phoneNumber.end, phoneNumber.buffer);
            try {
                File database = new File(GEOIP_DATABASE_PATH);
                DatabaseReader dbReader = new DatabaseReader.Builder(database).build();
                CityResponse response = dbReader.city(InetAddress.getByName(phoneNumberStr));
                String city = response.getCity().getName();
                String countryCode = response.getCountry().getIsoCode();
                String countryName = response.getCountry().getName();
                String result = phoneNumberStr + " is located in " + city + ", " + countryCode + ", " + countryName;
                byte[] bytes = result.getBytes();
                out.buffer = buffer;
                out.start = 0;
                out.end = bytes.length;
                buffer.setBytes(0, bytes);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

Wrong, Wrong, Wrong…

I was impressed that GPT was able to generate anything that remotely resembled a UDF. Now that I’ve said something positive… Let’s pick this apart.

The first issue that I have is that the functionality is just plain wrong. This class does not geolocate phone numbers. It geolocates IP addresses, and we know this because it uses the MaxMind libraries to do so. The second issue that I have is with the code itself. This code demonstrates a really poor understanding of how this SDK actually works and if it were deployed, it would perform terribly.

The Drill SDK has two methods that must be implemented: setup() and eval(). Drill functions are meant to be run as part of a SQL query, so the assumption is that they will be executed over collections of rows rather than individual items. To make UDFs scalable, anything that only needs to happen once should be placed in the setup() method, and anything that you do on each row goes in the eval() method.

The issue with the GPT code is that it creates a new DatabaseReader object in the eval() method, which means that every function call gets a new DatabaseReader. The same goes for the File object. This is horrendously inefficient and would likely not work for a large dataset.
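To make the cost concrete, here is a minimal sketch in plain Java with no Drill dependencies. Everything in it is hypothetical and exists only for illustration: ExpensiveReader stands in for something like DatabaseReader, and RowFunction is a made-up interface loosely modelled on the setup()/eval() split. It simply counts how many readers get built when the same function runs over several rows.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class SetupVsEvalSketch {

    // Counts how many times the "expensive" resource is constructed.
    static final AtomicInteger READERS_CREATED = new AtomicInteger();

    // Hypothetical stand-in for an expensive-to-build lookup resource.
    static class ExpensiveReader {
        ExpensiveReader() {
            READERS_CREATED.incrementAndGet();
        }

        String lookup(String key) {
            return "result for " + key;
        }
    }

    // Hypothetical per-row function contract (not the real Drill interface).
    interface RowFunction {
        void setup();               // called once before any rows
        String eval(String input);  // called once per row
    }

    // Anti-pattern: a new reader is built for every row.
    static class PerRowReader implements RowFunction {
        public void setup() { }

        public String eval(String input) {
            return new ExpensiveReader().lookup(input);
        }
    }

    // Preferred: the reader is built once in setup() and reused by eval().
    static class SharedReader implements RowFunction {
        private ExpensiveReader reader;

        public void setup() {
            reader = new ExpensiveReader();
        }

        public String eval(String input) {
            return reader.lookup(input);
        }
    }

    // Drives a function over all rows and reports how many readers were created.
    static int readersCreatedFor(RowFunction fn, List<String> rows) {
        READERS_CREATED.set(0);
        fn.setup();
        for (String row : rows) {
            fn.eval(row);
        }
        return READERS_CREATED.get();
    }

    public static void main(String[] args) {
        List<String> rows = Arrays.asList("a", "b", "c", "d");
        System.out.println("per-row: " + readersCreatedFor(new PerRowReader(), rows)); // prints "per-row: 4"
        System.out.println("shared:  " + readersCreatedFor(new SharedReader(), rows)); // prints "shared:  1"
    }
}
```

With four rows the per-row version builds four readers and the shared version builds one; on a real dataset that gap grows with the row count. In an actual Drill UDF, as far as I know, the shared object would be a field marked with Drill’s @Workspace annotation and initialized in setup(), rather than an ordinary private field as in this sketch.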

Is This Stolen?

So here’s the thing. When I first saw this, I thought the code looked remarkably familiar, and the reason is that I actually wrote a Drill UDF that performs IP geolocation (https://github.com/datadistillr/drill-geoip-functions). Mine actually works and is efficient.

With that said, my code is the only possible source for this, as it is the only library out there that does IP geolocation for Apache Drill. There are a few forks, but I’m fairly certain that my code is the original source. Additionally, my code has the Apache 2.0 license attached to each file and to the repo itself. This license allows redistribution but requires that the license be included with the code. The response from GPT did not include the original license.

What Am I Going to Do?

Sadly, probably nothing. I’m not using this library to make money, but to me, it is yet another example of OSS being abused by a large company. All this does is make me question whether OSS is going to be viable if the AI providers are simply going to abuse copyrights and licenses.